Co-Localization of Audio Sources in Images Using Binaural Features and Locally-Linear Regression

Abstract

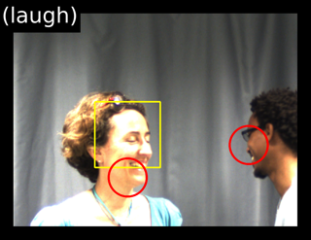

This paper addresses the problem of localizing audio sources using binaural measurements. We propose a supervised formulation that simultaneously localizes multiple sources at different locations. The approach is intrinsically efficient because, contrary to prior work, it relies on neither source separation nor monaural segregation. The method starts with a training stage that establishes a locally-linear Gaussian regression model between the directional coordinates of all the sources and the auditory features extracted from binaural measurements. While fixed-length wide-spectrum sounds (white noise) are used for training to reliably estimate the model parameters, we show that testing (localization) can be extended to variable-length sparse-spectrum sounds (such as speech), thus enabling a wide range of realistic applications. Indeed, we demonstrate that the method can be used for audio-visual fusion, namely to map speech signals onto images and hence to spatially align the audio and visual modalities, which makes it possible to discriminate between speaking and non-speaking faces. We release a novel corpus of real-room recordings that allows quantitative evaluation of the co-localization method in the presence of one or two sound sources. Experiments demonstrate increased accuracy and speed relative to several state-of-the-art methods.
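To make the idea of a locally-linear regression between features and source directions more concrete, here is a minimal sketch. It is not the authors' probabilistic Gaussian model: as a simplifying assumption, it partitions the feature space with plain k-means and fits one least-squares affine map per region. The function names (`fit_local_affine`, `predict_local_affine`, `make_toy_data`) and the synthetic three-dimensional features are hypothetical stand-ins for real binaural features.

```python
import numpy as np

def _nearest(X, centers):
    # Index of the closest center for every row of X.
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)

def fit_local_affine(X, Y, n_regions=6, n_iter=25, seed=0):
    """Fit one affine map per k-means region (hard assignments).

    X: (N, D) feature vectors, Y: (N, L) source directions.
    Returns region centers (K, D) and affine maps (K, D + 1, L).
    """
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=n_regions, replace=False)].copy()
    for _ in range(n_iter):
        labels = _nearest(X, centers)
        for k in range(n_regions):
            if np.any(labels == k):
                centers[k] = X[labels == k].mean(axis=0)
    labels = _nearest(X, centers)

    # Append a constant column so each map includes an offset term.
    Xa = np.hstack([X, np.ones((len(X), 1))])
    W_global = np.linalg.lstsq(Xa, Y, rcond=None)[0]
    maps = []
    for k in range(n_regions):
        idx = labels == k
        if idx.sum() < Xa.shape[1]:
            # Too few points to fit a local map: fall back to the global one.
            maps.append(W_global)
        else:
            maps.append(np.linalg.lstsq(Xa[idx], Y[idx], rcond=None)[0])
    return centers, np.stack(maps)

def predict_local_affine(X, centers, maps):
    # Apply, to each feature vector, the affine map of its nearest region.
    labels = _nearest(X, centers)
    Xa = np.hstack([X, np.ones((len(X), 1))])
    return np.einsum("nd,ndl->nl", Xa, maps[labels])

def make_toy_data(n, seed):
    # Synthetic stand-in: a 1-D "direction" y observed through
    # nonlinear 3-D "features" (an assumption for illustration only).
    rng = np.random.default_rng(seed)
    y = rng.uniform(-1.0, 1.0, size=(n, 1))
    X = np.hstack([np.sin(2 * y), np.cos(2 * y), y ** 2])
    return X, y
```

A typical use would call `fit_local_affine` on training pairs and `predict_local_affine` on held-out features. Hard region assignments keep the sketch short; a probabilistic mixture, as the abstract's "Gaussian regression model" suggests, would instead weight the local maps by posterior responsibilities.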

Main file

submission_taslp14F.pdf (13.62 MB)

twosources_ni5.png (110.84 KB)

| Origin | Files produced by the author(s) |
|---|---|

| Format | Figure, Image |
|---|---|
| Origin | Files produced by the author(s) |
