JellyLens: Content-Aware Adaptive Lenses

Abstract

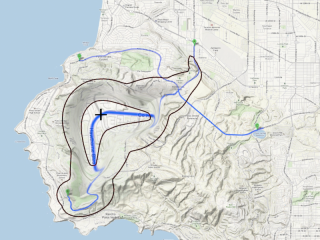

Focus+context lens-based techniques smoothly integrate two levels of detail using spatial distortion to connect the magnified region and the context. Distortion guarantees visual continuity but causes problems of interpretation and focus targeting, partly because most techniques are based on statically defined, regular lens shapes that result in far-from-optimal magnification and distortion. JellyLenses dynamically adapt to the shape of the objects of interest, providing detail-in-context visualizations of higher relevance by optimizing which regions fall into the focus, context, and spatially distorted transition regions. This both improves the visibility of content in the focus region and preserves a larger part of the context region. We describe the approach and its implementation, and report on a controlled experiment that evaluates the usability of JellyLenses compared to regular fisheye lenses, showing clear performance improvements with the new technique for a multi-scale visual search task.

Domains

Human-Computer Interaction [cs.HC]

Main file

jelly-lenses-hal.pdf (1.91 MB)


ftc.png (716.06 KB)


jelly-lenses-UIST2012.mov (37.78 MB)


| Field | Value |
|---|---|
| Origin | Files produced by the author(s) |
| Format | Figure, Image |