Learning structured prediction models for interactive image labeling

Abstract

We propose structured models for image labeling that explicitly take into account the dependencies among image labels. These models are more expressive than independent label predictors and lead to more accurate predictions. While the improvement is modest for fully automatic image labeling, the gain is significant in an interactive scenario where a user provides the values of some of the image labels. Such an interactive scenario offers an interesting trade-off between accuracy and manual labeling effort. The structured models are used to decide which labels should be set by the user, and to transfer the user input into more accurate predictions for the other image labels. We also apply our models to attribute-based image classification, where attribute predictions for a test image are mapped to class probabilities by means of a given attribute-class mapping. In this case the structured models are built at the attribute level. Here too we consider an interactive system, which asks a user to set some of the attribute values in order to maximally improve class prediction performance. Experimental results on three publicly available benchmark data sets show that in all scenarios our structured models lead to more accurate predictions and leverage user input much more effectively than state-of-the-art independent models.
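The attribute-based setting described in the abstract can be illustrated with a small sketch. It deliberately uses the simpler independent-attribute baseline, not the structured models proposed in the paper, and it makes several assumptions that do not come from the source: attributes are binary, the attribute-class mapping is a 0/1 matrix, the class prior is uniform, and the question to ask is chosen by minimizing the expected entropy of the class posterior. All function names and the toy numbers are hypothetical.

```python
import math


def class_posterior(attr_probs, class_attr):
    """Map attribute probabilities to class probabilities.

    attr_probs[a] is the predicted probability that attribute a is present.
    class_attr[c][a] is 1 if class c has attribute a, else 0 (assumed given).
    Assumes independent attributes and a uniform class prior.
    """
    scores = []
    for signature in class_attr:
        score = 1.0
        for p, on in zip(attr_probs, signature):
            score *= p if on else 1.0 - p
        scores.append(score)
    total = sum(scores)
    if total == 0.0:
        # No class is compatible with the attribute values: fall back to uniform.
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]


def entropy(dist):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in dist if p > 0.0)


def best_attribute_to_ask(attr_probs, class_attr):
    """Return the index of the attribute whose answer is expected to
    reduce class uncertainty the most (assumed selection criterion)."""
    best_index, best_entropy = None, float("inf")
    for a, p in enumerate(attr_probs):
        expected = 0.0
        # Weight each possible user answer by its predicted probability.
        for answer, weight in ((1.0, p), (0.0, 1.0 - p)):
            if weight == 0.0:
                continue
            clamped = list(attr_probs)
            clamped[a] = answer  # simulate the user setting this attribute
            expected += weight * entropy(class_posterior(clamped, class_attr))
        if expected < best_entropy:
            best_index, best_entropy = a, expected
    return best_index


# Toy example: three classes described by two binary attributes.
toy_class_attr = [[1, 0], [0, 1], [1, 1]]
toy_attr_probs = [0.9, 0.5]
posterior = class_posterior(toy_attr_probs, toy_class_attr)
question = best_attribute_to_ask(toy_attr_probs, toy_class_attr)
```

In this toy case the second attribute is the uncertain one, so asking the user about it is expected to be the most informative. A structured model would additionally propagate the user's answer to the predictions of the other attributes, which this independent sketch does not do.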

Main file

0300.web.pdf (477.32 KB)

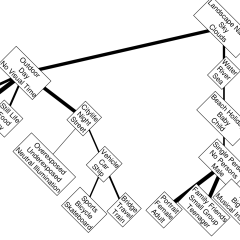

tree4.png (180.85 KB)

| Origin | Files produced by the author(s) |
|---|---|
| Format | Figure, Image |
