Hyperfeatures - multilevel local coding for visual recognition

Abstract

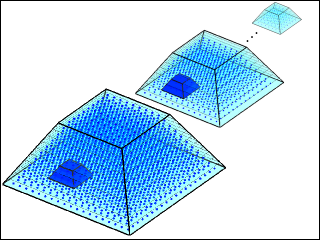

Histograms of local appearance descriptors are a popular representation for visual recognition. They are highly discriminant and offer good resistance to local occlusions and to geometric and photometric variations, but they cannot exploit spatial co-occurrence statistics at scales larger than their local input patches. We present a new multilevel visual representation, 'hyperfeatures', designed to remedy this. The starting point is the familiar notion that, to detect object parts, it often suffices in practice to detect co-occurrences of more local object fragments. This process can be formalized as comparing image patches against a codebook of known fragments (e.g. by vector quantization), followed by local aggregation of the resulting codebook membership vectors to detect co-occurrences. It converts local collections of image descriptor vectors into somewhat less local histogram vectors: higher-level but spatially coarser descriptors. Since the output is again a local descriptor vector, the process can be iterated, and doing so captures and codes ever larger assemblies of object parts and increasingly abstract or 'semantic' image properties. We formulate the hyperfeatures model and study its performance under several image coding methods, including clustering-based Vector Quantization, Gaussian Mixtures, and combinations of these with Latent Dirichlet Allocation. We find that the resulting high-level features improve performance on several object and texture image classification tasks.
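The iterated "code, then aggregate" loop described above can be illustrated with a minimal sketch. It assumes hard vector quantization with a plain k-means codebook (the abstract also studies Gaussian Mixtures and LDA, which would replace the hard one-hot coding step), square non-overlapping pooling windows, and a final image vector formed by concatenating the image-level code histogram from every level. All function names (`kmeans`, `vq_codes`, `aggregate`, `fit_hyperfeatures`, `encode`) and parameters (`levels`, `k`, `win`, `stride`) are hypothetical illustrations, not the authors' implementation, and random arrays stand in for real dense patch descriptors.

```python
import numpy as np

def kmeans(x, k, iters=10, seed=0):
    """Plain Lloyd's k-means on the rows of x; returns a (k, d) codebook."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(iters):
        # Squared distance from every vector to every center.
        d2 = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
        labels = d2.argmin(1)
        for j in range(k):
            members = x[labels == j]
            if len(members):  # keep the old center if a cluster empties
                centers[j] = members.mean(0)
    return centers

def vq_codes(grid, codebook):
    """Hard vector quantization: one-hot codebook membership per grid cell."""
    h, w, d = grid.shape
    flat = grid.reshape(-1, d)
    d2 = ((flat[:, None, :] - codebook[None, :, :]) ** 2).sum(-1)
    onehot = np.eye(len(codebook))[d2.argmin(1)]
    return onehot.reshape(h, w, len(codebook))

def aggregate(codes, win=2, stride=2):
    """Local histograms: average membership vectors over win x win windows.

    The result is a coarser grid whose cells are again descriptor vectors
    (each sums to 1), so it can be fed back into vq_codes.
    """
    h, w, _ = codes.shape
    if h < win or w < win:
        raise ValueError("grid smaller than pooling window")
    rows = range(0, h - win + 1, stride)
    cols = range(0, w - win + 1, stride)
    return np.array([[codes[r:r + win, c:c + win].mean(axis=(0, 1))
                      for c in cols] for r in rows])

def fit_hyperfeatures(train_grids, levels=3, k=8, win=2, stride=2):
    """Learn one codebook per level from a set of training descriptor grids."""
    codebooks, grids = [], list(train_grids)
    for level in range(levels):
        pooled = np.concatenate([g.reshape(-1, g.shape[-1]) for g in grids])
        cb = kmeans(pooled, min(k, len(pooled)), seed=level)
        codebooks.append(cb)
        if level + 1 < levels:
            # Next level's descriptors are local histograms of this level's codes.
            grids = [aggregate(vq_codes(g, cb), win, stride) for g in grids]
    return codebooks

def encode(grid, codebooks, win=2, stride=2):
    """Concatenate the image-level code histogram from every level."""
    feats = []
    for level, cb in enumerate(codebooks):
        codes = vq_codes(grid, cb)
        feats.append(codes.mean(axis=(0, 1)))
        if level + 1 < len(codebooks):
            grid = aggregate(codes, win, stride)
    return np.concatenate(feats)

if __name__ == "__main__":
    rng = np.random.default_rng(42)
    # Stand-ins for dense 16-D patch descriptors on a 16 x 16 grid.
    train = [rng.normal(size=(16, 16, 16)) for _ in range(4)]
    books = fit_hyperfeatures(train, levels=3, k=8)
    vec = encode(train[0], books)
    print(vec.shape)  # (24,)
```

Codebooks are learned once on pooled training descriptors and then shared by every image, so that the per-level histograms are comparable across images; learning a fresh codebook per image would make the codes meaningless for classification. Each level halves the grid resolution here (16 x 16, then 8 x 8, then 4 x 4), which mirrors the "higher-level but spatially coarser" trade-off described in the abstract.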

Main file

Agarwal-Triggs-eccv06.pdf (3.25 MB)

hyperlevels.png (129.82 KB)

Agarwal-eccv06-talk.pdf (5.59 MB)

| Origin | Publisher files permitted in an open archive |
|---|---|

| Format | Figure, Image |
|---|---|

| Format | Other |
|---|---|