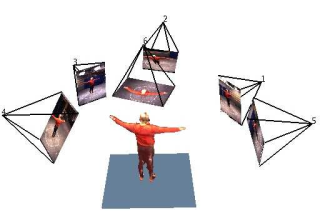

Omnidirectional texturing of human actors from multiple view video sequences

Abstract

In 3D video, the behavior of a recorded object can be observed from any viewpoint, because the 3D video captures the object's 3D shape and color. However, real-world views are limited to those of a finite number of cameras, so only a coarse model of the object can be recovered in real time. It therefore becomes necessary to texture the object judiciously with images recovered from the cameras. One of the problems in multi-texturing is deciding which portion of the 3D model is visible from which camera. We propose a texture-mapping algorithm that tries to bypass the problem of deciding exactly whether a point is visible from a given camera. Given more than two color values for each pixel, a statistical test makes it possible to exclude outlying color data before blending.
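The idea of rejecting outlying colors before blending can be illustrated with a minimal sketch. The abstract does not say which statistical test is used, so the median-based rejection rule below is an assumption chosen for illustration, not the authors' method. The function name `blend_without_outliers`, the `threshold` parameter, and the noise floor of 1.0 are likewise hypothetical.

```python
from statistics import median


def blend_without_outliers(samples, threshold=3.0):
    """Blend the RGB samples that several cameras give for one surface point.

    samples: list of (r, g, b) tuples, one per camera that may see the point.
    threshold: hypothetical rejection factor (an assumption, not from the paper).
    """
    if not samples:
        raise ValueError("at least one color sample is required")

    # With one or two samples there is nothing to test against: plain average.
    if len(samples) < 3:
        return tuple(sum(channel) / len(samples) for channel in zip(*samples))

    # Per-channel median serves as a robust estimate of the true color.
    centre = tuple(median(channel) for channel in zip(*samples))

    # Euclidean distance of each sample to that robust centre.
    dists = [
        sum((a - b) ** 2 for a, b in zip(sample, centre)) ** 0.5
        for sample in samples
    ]

    # Median distance as a robust spread; the floor of 1.0 keeps samples
    # that nearly agree from being rejected over negligible differences.
    scale = max(median(dists), 1.0)

    # Keep only samples close to the centre, then average them.
    kept = [s for s, d in zip(samples, dists) if d <= threshold * scale]
    return tuple(sum(channel) / len(kept) for channel in zip(*kept))


# Three cameras agree; a fourth sees an occluder and reports a dark color.
colors = [(200, 100, 50), (202, 98, 52), (198, 102, 48), (10, 10, 10)]
blended = blend_without_outliers(colors)
```

In this sketch a camera that is actually occluded contributes a color far from the others and is discarded by the test, so no explicit visibility decision is needed for that camera.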

Main file

RoCHI.pdf (132.24 KB)

RoCHI_Page_4_Image_0001.jpg (16.4 KB)

| Origin | Files produced by the author(s) |
|---|---|
| Format | Figure, Image |
