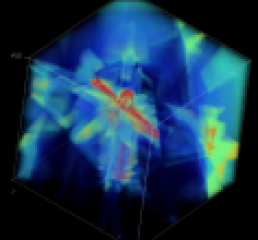

Fusion of Multi-View Silhouette Cues Using a Space Occupancy Grid

Abstract

In this report, we investigate what can be inferred from several silhouette probability maps in multi-camera environments. To this end, we propose a new framework for multi-view silhouette cue fusion. This framework uses a space occupancy grid as a dense probabilistic 3D representation of scene contents. Such a representation is of great interest for various computer vision applications, for instance in perception or localization. Our main contribution is to introduce the occupancy grid concept, popular in the robotics community, to multi-camera environments. The idea is to consider each camera pixel as a statistical occupancy sensor. All pixel observations are then used jointly to infer where matter is present in the scene, and with what likelihood. As our results illustrate, this simple model has several advantages. Most sources of uncertainty are explicitly modeled, and no premature decision about pixel labeling is made, so the information carried by each pixel is preserved. Consequently, scene object localization and robust volume reconstruction can be achieved with no constraint on camera placement or object visibility. In addition, this representation can be used to compute improved, consistent silhouettes in the original views.
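The fusion idea in the abstract can be illustrated with a minimal sketch. It is not the report's sensor model, which is not reproduced on this page. It assumes instead a much simpler rule: each voxel center is projected into every view, the silhouette probability at that pixel is treated as an independent estimate of occupancy, and the estimates are combined in log-odds form. The names `fuse_occupancy`, `project`, and `logit`, the 3x4 projection matrices, and the nearest-pixel lookup are all hypothetical choices made for this example.

```python
import math

def logit(p, eps=1e-6):
    """Log-odds of p, clamped away from 0 and 1 to stay finite."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))

def project(P, X):
    """Project 3D point X with a 3x4 matrix P; return (u, v), or None if behind the camera."""
    xh = (X[0], X[1], X[2], 1.0)
    u, v, w = (sum(P[r][c] * xh[c] for c in range(4)) for r in range(3))
    if w <= 0:
        return None
    return u / w, v / w

def fuse_occupancy(voxels, cameras, prob_maps, prior=0.5):
    """Return one occupancy probability per voxel center.

    Assumption (not from the report): views are conditionally independent,
    so per-view log-odds are summed relative to the prior.
    """
    l0 = logit(prior)
    posteriors = []
    for X in voxels:
        l = l0
        for P, pmap in zip(cameras, prob_maps):
            uv = project(P, X)
            if uv is None:
                continue  # voxel not visible in this view: no evidence added
            col, row = int(round(uv[0])), int(round(uv[1]))
            if 0 <= row < len(pmap) and 0 <= col < len(pmap[0]):
                l += logit(pmap[row][col]) - l0
        posteriors.append(1.0 / (1.0 + math.exp(-l)))
    return posteriors

# Toy setup: two orthographic views (along z and along x) of a 3x3x3 volume.
cam_z = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1]]  # (u, v) = (x, y)
cam_x = [[0, 0, 1, 0], [0, 1, 0, 0], [0, 0, 0, 1]]  # (u, v) = (z, y)
pmap = [[0.1, 0.1, 0.1], [0.1, 0.9, 0.1], [0.1, 0.1, 0.1]]
voxels = [(1, 1, 1), (0, 1, 1), (0, 1, 0), (5, 5, 5)]
post = fuse_occupancy(voxels, [cam_z, cam_x], [pmap, pmap])
```

In this toy setup, a voxel supported by both views gets a high occupancy probability, a voxel with conflicting evidence stays at the prior, and a voxel seen by no camera also stays at the prior. The last case shows that no visibility constraint is needed for the grid to remain well defined.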

Main file

RR-5551.pdf (965.52 KB)

SilhouetteFusion.png (19.64 KB)

SilhouetteCueFusion.avi (5.02 MB)

| File | Format |
|---|---|
| SilhouetteFusion.png | Figure, Image |
| SilhouetteCueFusion.avi | Video |