LP-based policies for restless bandits: necessary and sufficient conditions for (exponentially fast) asymptotic optimality

Résumé

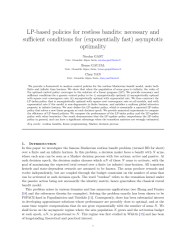

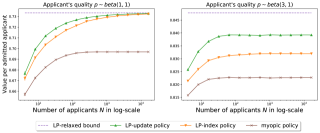

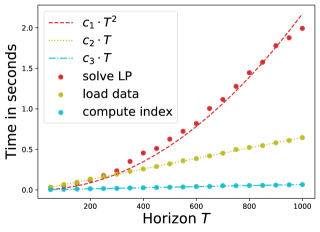

We provide a framework to analyse control policies for the restless Markovian bandit model, under both finite and infinite time horizon. We show that when the population of arms goes to infinity, the value of the optimal control policy converges to the solution of a linear program (LP). We provide necessary and sufficient conditions for a generic control policy to be: i) asymptotically optimal; ii) asymptotically optimal with square root convergence rate; iii) asymptotically optimal with exponential rate. We then construct the LP-index policy that is asymptotically optimal with square root convergence rate on all models, and with exponential rate if the model is non-degenerate in finite horizon, and satisfies a uniform global attractor property in infinite horizon. We next define the LP-update policy, which is essentially a repeated LP-index policy that solves a new linear program at each decision epoch. We provide numerical experiments to compare the efficiency of LP-based policies. We compare the performance of the LP-index policy and the LP-update policy with other heuristics. Our result demonstrates that the LP-update policy outperforms the LP-index policy in general, and can have a significant advantage when the transition matrices are wrongly estimated.

Fichier principal

LP_finite_infinite.pdf (687.85 Ko)

Télécharger le fichier

LP_finite_infinite.pdf (687.85 Ko)

Télécharger le fichier

candidate_selection_all_data.pdf (25.38 Ko)

Télécharger le fichier

candidate_selection_all_data.pdf (25.38 Ko)

Télécharger le fichier

complexity1.pdf (17.43 Ko)

Télécharger le fichier

complexity1.pdf (17.43 Ko)

Télécharger le fichier

complexity2.pdf (17.14 Ko)

Télécharger le fichier

complexity2.pdf (17.14 Ko)

Télécharger le fichier

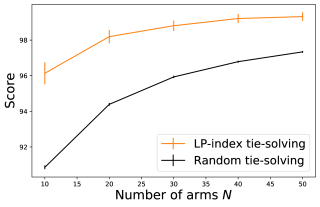

tie-solving.pdf (16.01 Ko)

Télécharger le fichier

tie-solving.pdf (16.01 Ko)

Télécharger le fichier

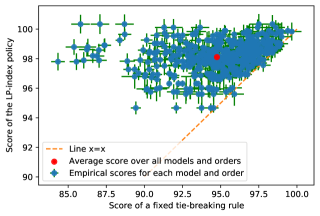

tie-solving_fixedVSlpindex.pdf (39.55 Ko)

Télécharger le fichier

tie-solving_fixedVSlpindex.pdf (39.55 Ko)

Télécharger le fichier

| Format | Figure, Image |

|---|---|

| Origine | Fichiers produits par l'(les) auteur(s) |

| Format | Figure, Image |

|---|

| Format | Figure, Image |

|---|

| Format | Figure, Image |

|---|

| Format | Figure, Image |

|---|