Bayesian View Synthesis and Image-Based Rendering Principles

Abstract

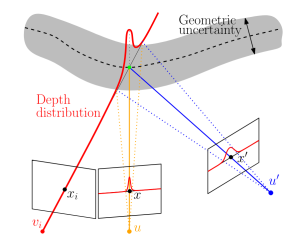

In this paper, we address the problem of synthesizing novel views from a set of input images. State-of-the-art methods, such as the Unstructured Lumigraph, use heuristics to combine information from the original views, often relying on an explicit or implicit approximation of the scene geometry. While these heuristics have been extensively explored and proven to work effectively, a Bayesian formulation was recently introduced that formalizes some of them and points out which physical phenomena could lie behind each. However, some important heuristics were still not taken into account and lacked proper formalization. We contribute a new physics-based generative model and the corresponding Maximum a Posteriori (MAP) estimate, providing the desired unification between heuristics-based methods and a Bayesian formulation. The key point is to systematically consider the error induced by the uncertainty in the geometric proxy. We provide an extensive discussion, analyzing how the obtained equations explain the heuristics developed in previous methods. Furthermore, we show that our novel Bayesian model significantly improves the quality of novel views, in particular when the scene geometry estimate is inaccurate.
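The abstract's key idea is that each input view's contribution should reflect how much the geometric proxy's uncertainty corrupts it. A deliberately simplified sketch can illustrate this, and it is not the paper's generative model. It assumes independent Gaussian color noise per view and a flat prior. Under those assumptions, the MAP estimate of a target pixel's color is the inverse-variance weighted mean of the colors reprojected from each input view. The hypothetical `map_blend` function models each view's variance as sensor noise plus a first-order geometric term: a proxy error of `depth_sigma` pixels in a region whose image-gradient magnitude is `grad_norm` perturbs the sampled color by roughly their product. All function and parameter names are illustrative assumptions.

```python
import numpy as np


def map_blend(colors, sigma_sensor, depth_sigma, grad_norm):
    """Hypothetical MAP blend of per-view color samples for one target pixel.

    Simplifying assumptions (not the paper's model): independent Gaussian
    noise per view and a flat prior, so the MAP estimate is the
    inverse-variance weighted mean of the samples.

    colors:       (n_views, 3) colors reprojected from each input view
    sigma_sensor: std of image noise, assumed identical for all views
    depth_sigma:  (n_views,) assumed std of the proxy error, in image pixels
    grad_norm:    (n_views,) image-gradient magnitude at the sampled location
    """
    colors = np.asarray(colors, dtype=float)
    depth_sigma = np.asarray(depth_sigma, dtype=float)
    grad_norm = np.asarray(grad_norm, dtype=float)

    # First-order (linearized) model: a pixel shift of size depth_sigma
    # changes the sampled color by about grad_norm * depth_sigma.
    variances = sigma_sensor ** 2 + (grad_norm * depth_sigma) ** 2

    # Inverse-variance weights, normalized to sum to one.
    weights = 1.0 / variances
    weights /= weights.sum()

    # Weighted mean over views gives one RGB estimate.
    return weights @ colors, weights


# Two views: the second has uncertain geometry in a textured region.
color, weights = map_blend([[1, 0, 0], [0, 0, 1]], 0.1, [0.0, 1.0], [1.0, 1.0])
print(weights)
```

In this example almost all the weight goes to the first view, whose geometry is assumed exact. When all views carry the same uncertainty, the estimate reduces to a plain average, which is the unweighted blending that the uncertainty term refines.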

Main file

BayesianViewSyntehsis_PUJADES_CVPR14.pdf (211.74 KB)



BayesianPreviz (1).png (100.15 KB)


366_PUJADES_SupplementaryMaterial_final.pdf (43.65 MB)


BayesianPreviz.png (100.15 KB)


poster_BVS_IBRP.pdf (4.1 MB)


video-spotlight-O4F4.mp4 (9.26 MB)



| Field | Value |
|---|---|
| Origin | Files produced by the author(s) |
| Format | Figure, Image |
| Format | Other |
| Format | Other |
| Format | Video |
