Expanded Parts Model for Human Attribute and Action Recognition in Still Images

Résumé

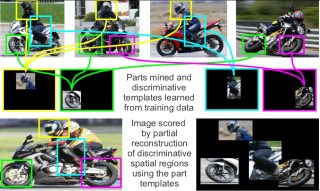

We propose a new model for recognizing human attributes (e.g. wearing a suit, sitting, short hair) and actions (e.g. running, riding a horse) in still images. The proposed model relies on a collection of part templates which are learnt discriminatively to explain specific scale-space locations in the images (in human centric coordinates). It avoids the limitations of highly structured models, which consist of a few (i.e. a mixture of) 'average' templates. To learn our model, we propose an algorithm which automatically mines out parts and learns corresponding discriminative templates with their respective locations from a large number of candidate parts. We validate the method on recent challenging datasets: (i) Willow 7 actions [7], (ii) 27 Human Attributes (HAT) [25], and (iii) Stanford 40 actions [37]. We obtain convincing qualitative and state-of-the-art quantitative results on the three datasets.

Fichier principal

sharma_epm_cvpr2013.pdf (2.48 Mo)

Télécharger le fichier

sharma_epm_cvpr2013.pdf (2.48 Mo)

Télécharger le fichier

epm_iilus_icon.jpg (204.23 Ko)

Télécharger le fichier

epm_iilus_icon.jpg (204.23 Ko)

Télécharger le fichier

| Origine | Fichiers produits par l'(les) auteur(s) |

|---|

| Format | Figure, Image |

|---|