3D-Audio Matting, Post-editing and Re-rendering from Field Recordings

Abstract

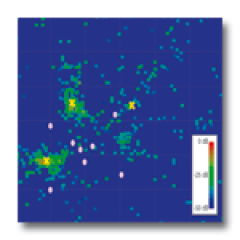

We present a novel approach to real-time spatial rendering of realistic auditory environments and sound sources recorded live in the field. Using a set of standard microphones distributed throughout a real-world environment, we record the sound field simultaneously from several locations. After spatial calibration, we segment this set of recordings into a number of auditory components, each with an associated location. We compare existing time-delay-of-arrival estimation techniques between pairs of widely spaced microphones and introduce a novel, efficient hierarchical localization algorithm. Using the high-level representation thus obtained, we can edit and re-render the acquired auditory scene over a variety of listening setups. In particular, we can move or alter the different sound sources and arbitrarily choose the listening position. We can also composite elements of different scenes together in a spatially consistent way. Our approach provides efficient rendering of complex soundscapes that would be challenging to model using discrete point sources and traditional virtual acoustics techniques. We demonstrate a wide range of possible applications for games, virtual and augmented reality, and audio-visual post-production.
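To make the notion of time delay of arrival between a microphone pair concrete, the following is a minimal sketch using plain time-domain cross-correlation. It is not the hierarchical localization algorithm introduced in the paper, nor any specific technique the paper compares; the function names `estimate_delay` and `delay_to_path_difference`, the 44.1 kHz sample rate, and the 343 m/s speed of sound are all assumptions made for illustration.

```python
def estimate_delay(ref, sig, max_lag):
    """Return the lag (in samples) that maximizes the cross-correlation.

    A positive result means `sig` is a delayed copy of `ref`.
    Brute-force search over [-max_lag, max_lag]; illustrative only.
    """
    best_lag = 0
    best_score = float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, r in enumerate(ref):
            j = i + lag
            if 0 <= j < len(sig):
                score += r * sig[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def delay_to_path_difference(lag, sample_rate, speed_of_sound=343.0):
    """Convert a lag in samples to a path-length difference in metres.

    The default speed of sound (343 m/s) is an assumed nominal value.
    """
    return lag / sample_rate * speed_of_sound


# Synthetic test signal: a short click as heard at microphone A,
# and the same click arriving 5 samples later at microphone B.
mic_a = [0.0] * 20 + [1.0, -0.5, 0.25] + [0.0] * 41
true_delay = 5
mic_b = [0.0] * true_delay + mic_a[:-true_delay]

lag = estimate_delay(mic_a, mic_b, max_lag=10)
path_diff = delay_to_path_difference(lag, sample_rate=44100)
print(lag)        # 5
print(path_diff)  # about 0.0389 (metres)
```

Each such delay constrains the source to a hyperboloid whose foci are the two microphones, so delays from several pairs are needed to localize a source. Real field recordings would also call for a more robust correlation measure than this raw dot product.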

Main file

eurasipJASP07.pdf (667.58 KB)


screen.gif (9.41 KB)


| Origin | Files produced by the author(s) |
|---|---|
| Format | Figure, Image |
