Learning to Track 3D Human Motion from Silhouettes

Abstract

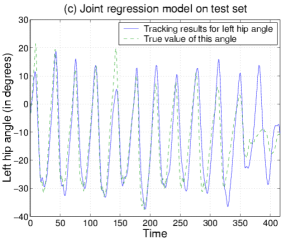

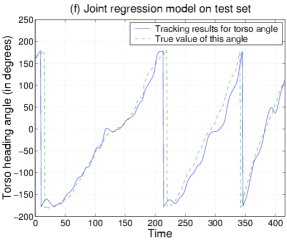

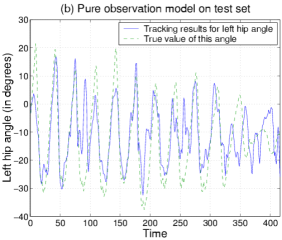

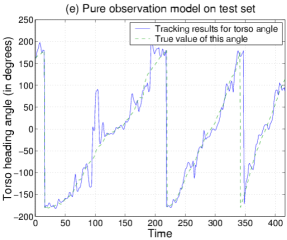

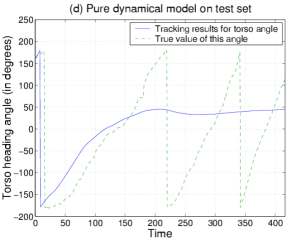

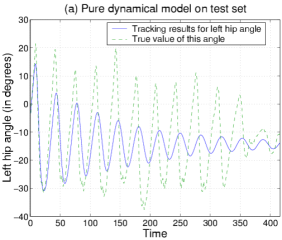

This paper describes a sparse Bayesian regression method for recovering 3D human body motion directly from silhouettes extracted from monocular video sequences. No detailed body shape model is needed, and realism is ensured by training on real human motion capture data. The tracker estimates 3D body pose by using Relevance Vector Machine regression to combine a learned autoregressive dynamical model with robust shape descriptors extracted automatically from image silhouettes. We studied several different combination methods, the most effective being to learn a nonlinear observation-update correction based on joint regression with respect to the predicted state and the observations. We demonstrate the method on a 54-parameter full body pose model, both quantitatively using motion capture based test sequences, and qualitatively on a test video sequence.
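The combination described above, a dynamical prediction followed by a correction regressed jointly on the predicted state and the observation, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names are hypothetical, the autoregressive model is taken to be second order, the data are a synthetic 2D trajectory rather than motion capture, and Gaussian kernel ridge regression stands in for the Relevance Vector Machine (it is not sparse and is not the authors' implementation).

```python
import numpy as np

def fit_ar2(X, ridge=1e-6):
    """Least-squares fit of x_t ~ [x_{t-1}, x_{t-2}] @ W (assumed 2nd-order AR)."""
    past = np.hstack([X[1:-1], X[:-2]])            # rows: [x_{t-1}, x_{t-2}]
    target = X[2:]                                 # rows: x_t
    reg = ridge * np.eye(past.shape[1])            # small ridge keeps the solve stable
    return np.linalg.solve(past.T @ past + reg, past.T @ target)

def gaussian_kernel(U, V, gamma):
    """Pairwise Gaussian kernel between rows of U and rows of V."""
    sq = ((U[:, None, :] - V[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * sq)

def fit_correction(X_pred, Z, X_true, gamma=1.0, ridge=1e-3):
    """Kernel ridge regression from joint inputs (predicted state, observation)
    to the true state. Stand-in for RVM regression, not sparse."""
    J = np.hstack([X_pred, Z])
    K = gaussian_kernel(J, J, gamma)
    alpha = np.linalg.solve(K + ridge * np.eye(len(J)), X_true)
    return J, alpha

def correct(J, alpha, x_pred, z, gamma=1.0):
    """Corrected state estimate for one predicted state and one observation."""
    q = np.concatenate([x_pred, z])[None, :]
    return (gaussian_kernel(q, J, gamma) @ alpha)[0]

# Synthetic stand-ins: a 2D "pose" trajectory and 3D "shape descriptors".
rng = np.random.default_rng(0)
t = np.arange(200)
X = np.stack([np.sin(0.1 * t), np.cos(0.1 * t)], axis=1)
Z = np.tanh(X @ rng.normal(size=(2, 3))) + 0.05 * rng.normal(size=(200, 3))

W = fit_ar2(X)
X_pred = np.hstack([X[1:-1], X[:-2]]) @ W          # dynamical predictions for t >= 2
J, alpha = fit_correction(X_pred, Z[2:], X[2:])    # learn the joint correction
x_hat = correct(J, alpha, X_pred[10], Z[12])       # estimate for frame t = 12
```

The point of regressing on the joint input is that the observation alone can be ambiguous (different poses may yield similar silhouettes), while the predicted state alone drifts; feeding both to one regressor lets the learned map use each to disambiguate the other.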

Main file

Agarwal-icml04.pdf (1.53 MB)

01graph4a.png (38.33 KB)

02graph4a.png (26.72 KB)

03graph4a.png (37.44 KB)

04graph4a.png (28.71 KB)

05graph4a.png (24.43 KB)

06graph4a.png (32.78 KB)

video11icml.avi (4.3 MB)

video18icml.avi (439.35 KB)

| Origin | Files produced by the author(s) |
|---|---|
| Format | Figure, Image |
| Format | Other |
