Action Recognition from Arbitrary Views using 3D Exemplars

Abstract

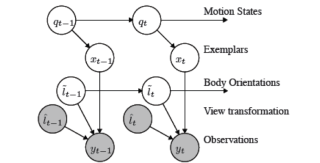

In this paper, we address the problem of learning compact, view-independent, realistic 3D models of human actions recorded with multiple cameras, for the purpose of recognizing those same actions from a single camera or a few cameras, without prior knowledge of the relative orientations between the cameras and the subjects. To this end, we propose a new framework in which actions are modeled using three-dimensional occupancy grids, built from multiple viewpoints, in an exemplar-based HMM. The novelty is that no 3D reconstruction is required during the recognition phase; instead, the learned 3D exemplars are used to produce 2D image information that is compared to the observations. Parameters that describe image projections are added as latent variables in the recognition process. In addition, the temporal Markov dependency applied to the view parameters allows them to evolve during recognition, as with a smoothly moving camera. The effectiveness of the framework is demonstrated with experiments on real datasets and with challenging recognition scenarios.
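The core idea, projecting learned 3D exemplars to 2D and treating the view as an unknown to be searched over, can be illustrated with a minimal sketch. This is not the authors' implementation: the voxel-set representation, the orthographic projection, the Jaccard distance, the discretization into eight orientations, and all names (`project`, `silhouette_distance`, `best_match`) are assumptions made for illustration. The temporal HMM part, including the Markov dependency on view parameters, is left out.

```python
import math

def project(voxels, theta):
    """Orthographic silhouette of occupied voxels, after rotating the
    exemplar by theta about the vertical (z) axis. Hypothetical helper."""
    c, s = math.cos(theta), math.sin(theta)
    pixels = set()
    for x, y, z in voxels:
        u = round(c * x - s * y)  # horizontal image coordinate
        pixels.add((u, z))        # depth along the viewing axis is dropped
    return pixels

def silhouette_distance(a, b):
    """Jaccard distance between two pixel sets (0.0 means identical).
    The choice of distance is an assumption, not taken from the paper."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)

def best_match(exemplars, observation, n_angles=8):
    """Search jointly over exemplar label and discretized orientation,
    returning (distance, exemplar name, orientation index)."""
    best = None
    for name, voxels in exemplars.items():
        for k in range(n_angles):
            theta = 2 * math.pi * k / n_angles
            d = silhouette_distance(project(voxels, theta), observation)
            if best is None or d < best[0]:
                best = (d, name, k)
    return best

# Two toy exemplars: a vertical "body" column with an arm held out or up.
body = {(0, 0, z) for z in range(5)}
exemplars = {
    "arm_out": body | {(1, 0, 3), (2, 0, 3)},
    "arm_up": body | {(0, 0, 5), (0, 0, 6)},
}

# A single 2D observation, here synthesized by viewing "arm_out" from behind.
observation = project(exemplars["arm_out"], math.pi)
result = best_match(exemplars, observation)
print(result)
```

In this toy setting the observation is only a 2D pixel set, yet both the exemplar and its orientation are recovered without reconstructing anything in 3D. A fuller version would replace the per-frame search with inference over time, so that the orientation is allowed to change only gradually between frames.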


