Neural Human Deformation Transfer

Abstract

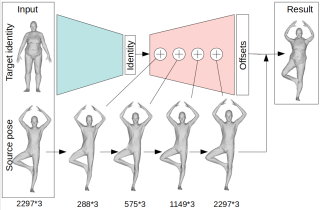

We consider the problem of human deformation transfer, where the goal is to retarget poses between different characters. Traditional methods that tackle this problem assume that a human pose model is available and transfer poses between characters using this model. In this work, we take a different approach and transform the identity of a character into a new identity without modifying the character's pose. This has the advantage of not requiring equivalences between 3D human poses to be defined, which is not straightforward because poses tend to change depending on the identity of the character performing them and because their meaning is highly contextual. To achieve the deformation transfer, we propose a neural encoder-decoder architecture in which only identity information is encoded and the decoder is conditioned on the pose. We use pose-independent representations, such as isometry-invariant shape characteristics, to represent identity features. Our model uses these features to supervise the prediction of offsets from the deformed pose to the result of the transfer. We show experimentally that our method outperforms state-of-the-art methods both quantitatively and qualitatively, and generalises better to poses not seen during training. We also introduce a fine-tuning step that makes it possible to obtain competitive results for extreme identities and to transfer simple clothing.
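The data flow described in the abstract (encode only the identity, condition the decoder on the pose, predict offsets from the posed mesh) can be illustrated with a minimal NumPy sketch. This is not the authors' architecture. The layer sizes, the max-pooled global identity code, the concatenation-based pose conditioning, and all function names (`init_mlp`, `mlp`, `encode_identity`, `decode_offsets`, `transfer`) are hypothetical, and random vectors stand in for the isometry-invariant identity features a real model would use.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes, rng):
    """Hypothetical helper: random weights for a small MLP with the given layer sizes."""
    return [(rng.normal(0.0, 0.1, (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(x, weights):
    """Apply the MLP row-wise; ReLU between layers, no activation on the last layer."""
    for i, (W, b) in enumerate(weights):
        x = x @ W + b
        if i < len(weights) - 1:
            x = np.maximum(x, 0.0)
    return x

def encode_identity(identity_feats, enc):
    """Encode ONLY identity: per-vertex pose-independent features (V, F) -> code (D,).
    Max pooling over vertices is an assumption; it makes the code order-independent."""
    return mlp(identity_feats, enc).max(axis=0)

def decode_offsets(pose_vertices, code, dec):
    """Decoder conditioned on the pose: each posed vertex (3,) is concatenated
    with the identity code (D,) and mapped to a 3D offset."""
    V = pose_vertices.shape[0]
    inp = np.concatenate([pose_vertices, np.broadcast_to(code, (V, code.shape[0]))], axis=1)
    return mlp(inp, dec)  # (V, 3)

def transfer(pose_vertices, identity_feats, enc, dec):
    """Result of the transfer = posed mesh + predicted per-vertex offsets."""
    code = encode_identity(identity_feats, enc)
    return pose_vertices + decode_offsets(pose_vertices, code, dec)

# Usage with placeholder data: 100 posed vertices, 8 identity features per vertex.
V, F, D = 100, 8, 16
enc = init_mlp([F, 32, D], rng)
dec = init_mlp([3 + D, 64, 3], rng)
pose = rng.normal(size=(V, 3))    # stand-in for a posed mesh
ident = rng.normal(size=(V, F))   # stand-in for isometry-invariant identity features
out = transfer(pose, ident, enc, dec)
print(out.shape)  # (100, 3)
```

Expressing the output as offsets means that a decoder which outputs zeros leaves the posed mesh unchanged. Under this sketch's assumptions, the pose is preserved by default and the network only has to learn the change of identity.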

Main file

Neural Human Deformation Transfer.pdf (2 MB)

architecture.png (190.45 KB)

Neural Human Deformation Transfer.mp4 (11.47 MB)

| Field | Value |
|---|---|
| Origin | Files produced by the author(s) |
| Format | Figure, Image |
| Format | Video |