Joint learning of object and action detectors

Abstract

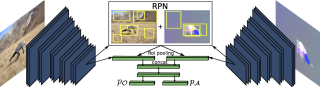

While most existing approaches for detection in videos focus on objects or human actions separately, we aim to jointly detect objects performing actions, such as cat eating or dog jumping. We introduce an end-to-end multitask objective that jointly learns object-action relationships. We compare it with different training objectives, validate its effectiveness for detecting object-action pairs in videos, and show that both object detection and action detection benefit from this joint learning. Moreover, the proposed architecture can be used for zero-shot learning of actions: our multitask objective leverages the commonalities of an action performed by different objects, e.g. dog and cat jumping, which makes it possible to detect the actions of an object without training on those object-action pairs. In experiments on the A2D dataset [50], we obtain state-of-the-art results on segmentation of object-action pairs. Finally, we apply our multitask architecture to detect visual relationships between objects in images of the VRD dataset [24].
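As a rough illustration of what a multitask objective over objects and actions could look like, the sketch below uses two separate softmax heads, sums their cross-entropy losses, and scores an object-action pair as the product of the two probabilities. This is an assumption made for illustration, not the authors' implementation: the function names, the two-class label sets, and the product scoring rule are all hypothetical.

```python
import math

# Hypothetical label sets, chosen only for this illustration.
OBJECTS = ["cat", "dog"]
ACTIONS = ["eating", "jumping"]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def multitask_loss(object_logits, action_logits, object_label, action_label):
    """Sum of two cross-entropy terms, one per head (assumed form)."""
    p_obj = softmax(object_logits)
    p_act = softmax(action_logits)
    return -math.log(p_obj[object_label]) - math.log(p_act[action_label])


def pair_scores(object_logits, action_logits):
    """Score every (object, action) pair as a product of head probabilities.

    Because the action head is shared across objects, a pair that never
    appeared in training still receives a score (the zero-shot case).
    """
    p_obj = softmax(object_logits)
    p_act = softmax(action_logits)
    return [[po * pa for pa in p_act] for po in p_obj]


# Toy logits for a single detection box (made-up numbers).
scores = pair_scores([0.2, 2.0], [0.1, 1.5])
best = max(
    ((o, a) for o in range(len(OBJECTS)) for a in range(len(ACTIONS))),
    key=lambda oa: scores[oa[0]][oa[1]],
)
best_pair = (OBJECTS[best[0]], ACTIONS[best[1]])
```

With these toy logits, `best_pair` is the highest-scoring combination even if that exact pair had no training examples, since each head is trained on its own labels.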

Main file

main.pdf (5.68 MB)

net.jpg (118.42 KB)

| Field | Value |
|---|---|
| Origin | Files produced by the author(s) |
| Format | Figure, Image |
