Estimation of Human Body Shape in Motion with Wide Clothing

Abstract

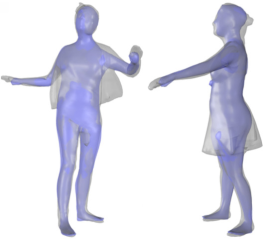

Estimating 3D human body shape in motion from a sequence of unstructured oriented 3D point clouds is important for many applications. We propose the first automatic method to solve this problem that works in the presence of loose clothing. We formulate the task as an optimization over identity and posture parameters in a shape space that captures likely body shape variations. Automation is achieved by leveraging Stitched Puppet, a recent robust pose detection method. To account for clothing, we take advantage of motion cues by encouraging the estimated body shape to lie inside the observations. The method is evaluated on a new benchmark containing different subjects, motions, and clothing styles, which allows the accuracy of body shape estimates to be measured quantitatively. Furthermore, we compare our results to existing methods that require manual input and demonstrate that results of similar visual quality can be obtained.
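The idea of encouraging the body estimate to lie inside the observations can be illustrated with a one-sided penalty. The sketch below is only an assumption about how such a term might look, not the energy used in the paper: the function names, the brute-force nearest-neighbour search, and the weights `w_out` and `w_in` are all hypothetical. It measures how far each body vertex sits from its nearest oriented observation along the observed normal, and penalizes vertices that poke outside the observed surface more strongly than vertices that stay inside it.

```python
import math

def signed_offset(vertex, point, normal):
    """Offset of `vertex` from an oriented observation, along its outward normal.

    Positive means the vertex lies outside the observed surface,
    negative means it lies inside (under the clothing).
    """
    return sum((v - p) * n for v, p, n in zip(vertex, point, normal))

def nearest_observation(vertex, cloud):
    """Brute-force nearest neighbour; `cloud` is a list of (point, normal) pairs."""
    return min(cloud, key=lambda pn: math.dist(vertex, pn[0]))

def inside_penalty(vertices, cloud, w_out=10.0, w_in=1.0):
    """Hypothetical one-sided data term (illustrative weights, not from the paper).

    Vertices outside the observation are weighted by `w_out`,
    vertices inside by the smaller `w_in`.
    """
    total = 0.0
    for vertex in vertices:
        point, normal = nearest_observation(vertex, cloud)
        d = signed_offset(vertex, point, normal)
        weight = w_out if d > 0.0 else w_in
        total += weight * d * d
    return total

# One observed point at height 1 with an upward-facing normal.
cloud = [((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))]
inside = inside_penalty([(0.0, 0.0, 0.9)], cloud)   # vertex below the surface
outside = inside_penalty([(0.0, 0.0, 1.1)], cloud)  # vertex above the surface
```

With these illustrative weights, a vertex the same distance outside the surface costs ten times more than one inside it, so an optimizer minimizing this term would tend to push the body under the observed clothing.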

Main file

Estimate of Human Body Shape in Motion with Wide Clothing.pdf (5.87 Mo)


tracking_under_clothes_teaser.jpg (27.9 Ko)


supplementaryVideo.mp4 (68.24 Mo)


| File | Type | Origin |
|---|---|---|
| Estimate of Human Body Shape in Motion with Wide Clothing.pdf | Main file | Files produced by the author(s) |
| tracking_under_clothes_teaser.jpg | Figure, Image | Files produced by the author(s) |
| supplementaryVideo.mp4 | Video | Files produced by the author(s) |
