Distance-Based Image Classification: Generalizing to new classes at near-zero cost

Abstract

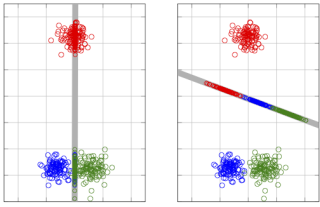

We study large-scale image classification methods that can incorporate new classes and training images continuously over time at negligible cost. To this end, we consider two distance-based classifiers, the k-nearest neighbor (k-NN) and nearest class mean (NCM) classifiers, and introduce a new metric learning approach for the latter. We also introduce an extension of the NCM classifier that allows for richer class representations. Experiments on the ImageNet 2010 challenge dataset, which contains over 10^6 training images of 1,000 classes, show that, surprisingly, the NCM classifier compares favorably to the more flexible k-NN classifier. Moreover, the NCM performance is comparable to that of linear SVMs, which obtain current state-of-the-art performance. We also study experimentally how well the learned metrics generalize to classes that were not used to learn them. Using a metric learned on 1,000 classes, we show results for the ImageNet-10K dataset, which contains 10,000 classes, and obtain performance that is competitive with the current state of the art while being orders of magnitude faster. Furthermore, we show how a zero-shot class prior based on the ImageNet hierarchy can improve performance when few training images are available.
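To make the "negligible cost" claim concrete, the following is a minimal sketch of a nearest class mean classifier, not the authors' implementation. It assumes NumPy, uses hypothetical names (`NearestClassMean`, `add_examples`, `projection`), and stands in for the learned metric with an optional fixed linear projection; the metric learning step itself is not shown. Adding a class or new images only updates a running sum and a count.

```python
import numpy as np


class NearestClassMean:
    """Toy NCM classifier: assigns a sample to the class with the closest mean."""

    def __init__(self, projection=None):
        # Hypothetical (d_out, d_in) matrix standing in for a learned metric.
        # None means plain squared Euclidean distance in the input space.
        self.projection = projection
        self.sums = {}    # label -> running sum of feature vectors
        self.counts = {}  # label -> number of samples seen

    def add_examples(self, label, features):
        """Add samples for a new or existing class; cost is one sum per class."""
        features = np.atleast_2d(np.asarray(features, dtype=float))
        if label not in self.sums:
            self.sums[label] = np.zeros(features.shape[1])
            self.counts[label] = 0
        self.sums[label] += features.sum(axis=0)
        self.counts[label] += features.shape[0]

    def predict(self, x):
        """Return the label whose class mean is nearest to x."""
        x = np.asarray(x, dtype=float)
        labels = list(self.sums)
        means = np.stack([self.sums[c] / self.counts[c] for c in labels])
        diffs = means - x
        if self.projection is not None:
            diffs = diffs @ self.projection.T  # compare in the projected space
        dists = np.einsum("ij,ij->i", diffs, diffs)  # squared distances
        return labels[int(np.argmin(dists))]


clf = NearestClassMean()
clf.add_examples("cat", [[0, 0], [0, 2]])
clf.add_examples("dog", [[4, 4], [6, 4]])
first = clf.predict([1, 1])

# A new class is added later without retraining anything.
clf.add_examples("bird", [[10, 0]])
second = clf.predict([9, 1])
```

In this toy setup, `first` is `"cat"` and `second` is `"bird"`: the third class becomes usable as soon as its mean is stored, which is the property the abstract emphasizes.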

Main file

mensink13pami.pdf (2.18 MB)

mensink13pami.png (54.91 KB)

| Field | Value |
|---|---|
| Origin | Files produced by the author(s) |
| Format | Figure, Image |
