Lifted coordinate descent for learning with trace-norm regularization

Abstract

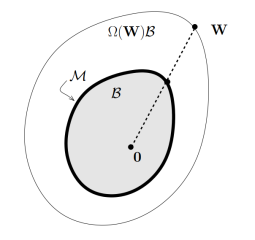

We consider the minimization of a smooth loss with trace-norm regularization, a natural objective in multi-class and multi-task learning. Even though the problem is convex, existing approaches either optimize a non-convex variational bound, which is not guaranteed to converge, or repeatedly perform singular-value decompositions, which prevents scaling beyond moderate matrix sizes. We lift the non-smooth convex problem into an infinite-dimensional smooth problem and apply coordinate descent to solve it. We prove that our approach converges to the optimum; empirically, it is competitive with or outperforms the state of the art.
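The lifting can be illustrated with a minimal Python/NumPy sketch. It assumes the standard gauge representation of the trace norm over rank-one unit atoms, ‖W‖_* = min { Σ_i θ_i : W = Σ_i θ_i u_i v_iᵀ, θ_i ≥ 0, ‖u_i‖₂ = ‖v_i‖₂ = 1 }. Under this representation, minimizing f(W) + λ‖W‖_* becomes minimizing f(Σ_i θ_i u_i v_iᵀ) + λ Σ_i θ_i over nonnegative weights, which is smooth in θ and has one coordinate per atom. The partial derivative along an atom u vᵀ is ⟨∇f(W), u vᵀ⟩ + λ, so the steepest coordinate is given by the top singular pair of −∇f(W), and no coordinate can decrease the objective once σ_max(∇f(W)) ≤ λ. The function name `lifted_coordinate_descent`, its parameters, the projected coordinate-gradient sweeps with step 1/L (L being a Lipschitz constant of ∇f), and the use of a dense SVD are hypothetical choices for illustration. This is not the authors' published algorithm.

```python
import numpy as np


def lifted_coordinate_descent(grad_f, shape, lam, lipschitz,
                              max_atoms=100, inner_sweeps=20, tol=1e-8):
    """Hypothetical sketch: minimize f(W) + lam * ||W||_* through the lifted problem
    min_{theta >= 0} f(sum_i theta_i u_i v_i^T) + lam * sum_i theta_i.

    grad_f(W) returns the gradient of the smooth loss f at W, and
    lipschitz is a Lipschitz constant of grad_f (Frobenius norm).
    """
    W = np.zeros(shape)
    us, vs, thetas = [], [], []  # active atoms u_i v_i^T and weights theta_i >= 0
    for _ in range(max_atoms):
        G = grad_f(W)
        # Steepest lifted coordinate: the unit rank-one atom u v^T minimizing
        # <G, u v^T>, i.e. the top singular pair of -G. A dense SVD keeps the
        # sketch short; only the leading pair is actually needed.
        U, s, Vt = np.linalg.svd(-G)
        if s[0] <= lam + tol:
            break  # no atom decreases the objective (up to tol): stop
        us.append(U[:, 0])
        vs.append(Vt[0])
        thetas.append(0.0)
        # Projected coordinate-gradient sweeps over the active weights.
        for _sweep in range(inner_sweeps):
            for i in range(len(thetas)):
                atom = np.outer(us[i], vs[i])  # unit Frobenius norm
                # Partial derivative of the lifted objective w.r.t. theta_i.
                g_i = np.sum(grad_f(W) * atom) + lam
                new = max(0.0, thetas[i] - g_i / lipschitz)
                W += (new - thetas[i]) * atom
                thetas[i] = new
    return W
```

As a usage example, for the denoising loss f(W) = ½‖W − Y‖_F² one would call `lifted_coordinate_descent(lambda W: W - Y, Y.shape, lam, lipschitz=1.0)`. In that special case each new atom is the next singular pair of Y, and the result should coincide with singular-value soft-thresholding of Y at level λ. On large matrices, replacing the dense SVD by a Lanczos or power method that returns only the leading pair would keep each step much cheaper than a full decomposition.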

Main file

dhm_2012_rod_atomdescent.pdf (341.23 KB)

gauge.png (37.74 KB)

| Origin | Explicit agreement for this deposit |
|---|---|
| Format | Figure, Image |