Large Scale Metric Learning for Distance-Based Image Classification

Abstract

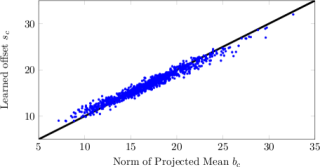

This paper studies large-scale image classification in a setting where new classes and training images can be added continuously at (near) zero cost. We cast this problem as one of learning a low-rank metric that is shared across all classes, and explore k-nearest neighbor (k-NN) and nearest class mean (NCM) classifiers. We also introduce an extension of the NCM classifier to allow for richer image representations. Experiments on the ImageNet 2010 challenge dataset, which contains more than 1M training images of 1K classes, show, surprisingly, that the NCM classifier compares favorably to the more flexible k-NN classifier. Moreover, the NCM performance with 256-dimensional features is comparable to that of linear SVMs, which were used to obtain the current state-of-the-art performance. We also study experimentally the generalization performance on classes that were not used to learn the metrics, and show how a zero-shot model based on the ImageNet hierarchy can be combined effectively with small training datasets. Using a metric learned on 1K classes, we show results for the ImageNet-10K dataset and obtain performance that is competitive with the current state of the art, while requiring significantly less training time.
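As an illustration of why an NCM classifier makes adding classes cheap, here is a minimal sketch in plain Python. It is not the authors' implementation: the projection matrix `W` is a hypothetical, fixed stand-in for the learned low-rank metric, and the class and function names are invented for this example. Each class is stored as a running sum of projected features, so a new class or a new training image only updates one mean.

```python
from collections import defaultdict


def project(W, x):
    """Apply a linear map W (d rows of length D) to a D-dimensional feature vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors of equal length."""
    return sum((u - v) ** 2 for u, v in zip(a, b))


class NearestClassMean:
    """NCM classifier in the space induced by a fixed projection W (assumed given)."""

    def __init__(self, W):
        self.W = W
        self.sums = {}                  # label -> sum of projected features
        self.counts = defaultdict(int)  # label -> number of examples seen

    def add_example(self, x, label):
        # Adding an image (or a whole new class) only touches one running sum.
        z = project(self.W, x)
        if label not in self.sums:
            self.sums[label] = [0.0] * len(z)
        self.sums[label] = [s + v for s, v in zip(self.sums[label], z)]
        self.counts[label] += 1

    def predict(self, x):
        # Assign x to the class whose projected mean is closest.
        z = project(self.W, x)

        def dist(label):
            mean = [s / self.counts[label] for s in self.sums[label]]
            return sq_dist(z, mean)

        return min(self.sums, key=dist)


# Hypothetical 2 x 3 projection that ignores the third feature dimension.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]

clf = NearestClassMean(W)
clf.add_example([0.0, 0.0, 5.0], "cat")
clf.add_example([1.0, 0.0, -5.0], "cat")
clf.add_example([4.0, 4.0, 0.0], "dog")
first = clf.predict([0.2, 0.1, 9.0])

# A new class is added later without retraining anything else.
clf.add_example([-4.0, 4.0, 0.0], "bird")
second = clf.predict([-3.5, 3.8, 1.0])
```

In this toy setup the metric is fixed by hand; in the setting the abstract describes, the projection would be learned once on the available classes and then reused unchanged when classes are added.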

Main file

RR-8077.pdf (2.79 MB)

| Field | Value |
|---|---|
| Origin | Explicit agreement for this deposit |
| Format | Figure, Image |
